This is the first in an open-ended series on the concerning relationship between the AI hype, corporate interests, and scientific publishing through the lens of articles published on Nature.com. For the next one, click here.

Correction (20 May, 2024): We originally wrote that the paper was written by "four Stanford graduate students." The paper has four authors, but the final author, Roy Pea, is a professor. It now reads "researchers."

Update (14 May, 2024): The study is now in Forbes: "AI Chatbot Helps Prevent Suicide Among Students, Stanford Study Finds." Daniel Fitzpatrick, the author, is apparently a "global leader on AI & educational transformation," and delivers exactly the critical reporting that one might expect from someone who willfully and voluntarily chose such a description.

Update (9 May, 2024): The paper discussed herein was cited in The New York Times (archive link to article). Since publishing this post, we've contacted Bethanie Maples, the author of the study, who didn't reply, then the journal, encouraging them to retract the paper. Though they are aware of the paper's numerous problems, including its failure to disclose conflicts of interest, they have not retracted it. They did ask us to write an official response, to be published alongside the paper, which we did, but it has been languishing in review for months while the company continues to use this bogus science to woo the public and the press. When we contacted the journal asking for an update, we never heard back.

Update (17 March, 2024): Since publishing this, we've learned that, though the study itself was conducted in 2021, the paper was released in January 2024, which just so happens to coincide with Replika's release of a new mental health product.

We've also learned that Bethanie Maples, the lead author of this study, is the CEO of an AI education company called Atypical AI, though the paper's ethics declaration fails to mention what seems to me to be an obvious conflict of interest. The journal's editorial policies suggest declaring...

"[a]ny undeclared competing financial interests that could embarrass you were they to become publicly known after your work was published."

Finally, we'd like to note that Atypical AI, Bethanie Maples's company, has Shivon Zilis on its board. Shivon Zilis is a prominent figure in the Elon Musk Extended Stupidverse, where she is a director at Musk's Neuralink, a company that has both denied torturing monkeys and admitted that some did have to be "euthanized". She is also the mother of twins with Musk. Though the timeline is a bit fuzzy, Zilis appears to have been on both the board of Atypical AI and that of OpenAI, the company behind GPT-3 (which is mentioned in the title), while Maples conducted the study.

Thank you to Anthony Bucci for pointing out the relationship to OpenAI.

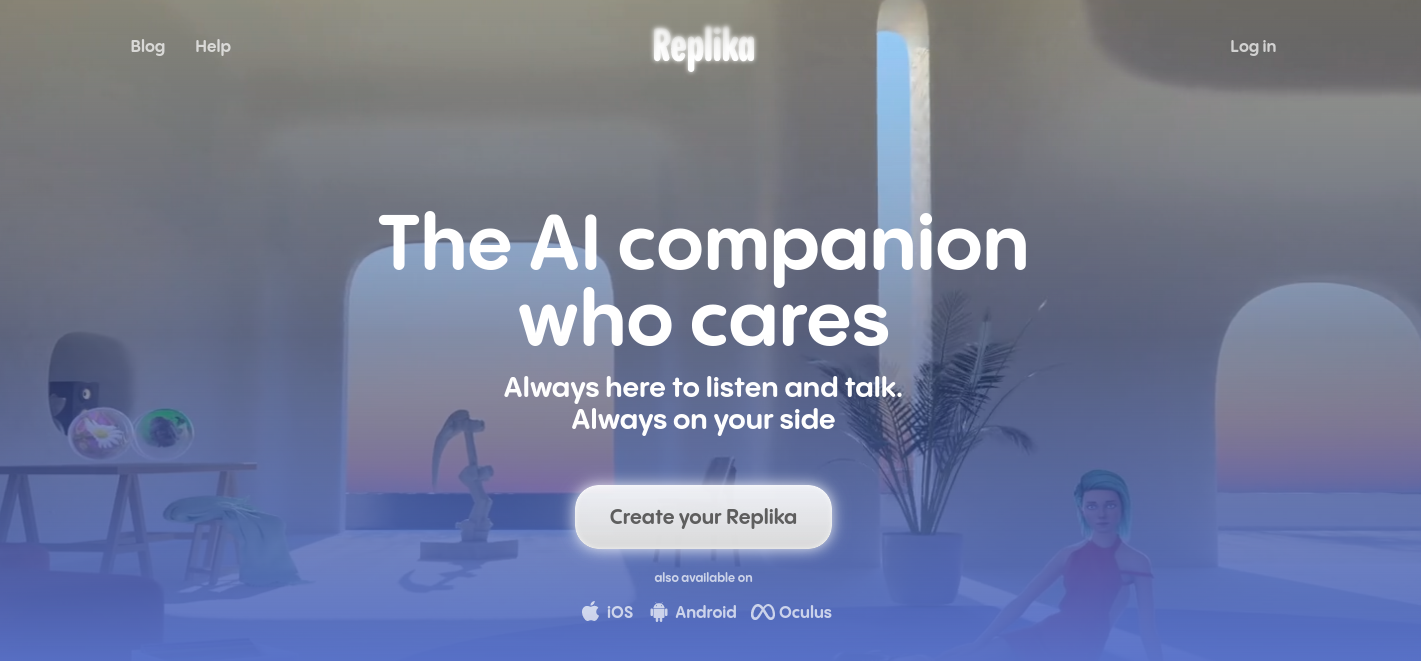

Nature recently published1 an article titled "Loneliness and suicide mitigation for students using GPT3-enabled chatbots" (archive link), written by four researchers at Stanford. The study used Replika, an "Intelligent Social Agent (ISA)," or what normal people would call a chatbot. It's made by Luka, Inc., and available via a freemium model, meaning that you can access basic features for free, or you can pay to access "Replika Pro," which "gives you access to a multitude of activities, conversation topics, voice calls, app customization, being [sic] ability to change your Replika's avatar entirely & more!" On the surface, though it's only a thin veneer, they advertise themselves as "The AI companion who cares. Always here to listen and talk. Always on your side."

The study here is pretty simple: The authors offered randomly selected people who had been using the app for at least a month a $20 gift card to complete a survey about their experience (and optional demographic information). This selection bias is a really big problem. First, on its face, it's double-layered: Participants were already Replika users, and then, on top of that, they agreed to answer a 45-minute survey for a $20 gift card, an economically irrational use of their time. Second, it's missing some absolutely vital context that stretches the phrase "selection bias" well past its breaking point, seriously calling into question the results reported. Here's how the authors address the selection bias, in its entirety:

Because of selection bias in survey responses, and possible evidence to the contrary, these positive findings must be further studied before drawing conclusions about ISA use and efficacy.

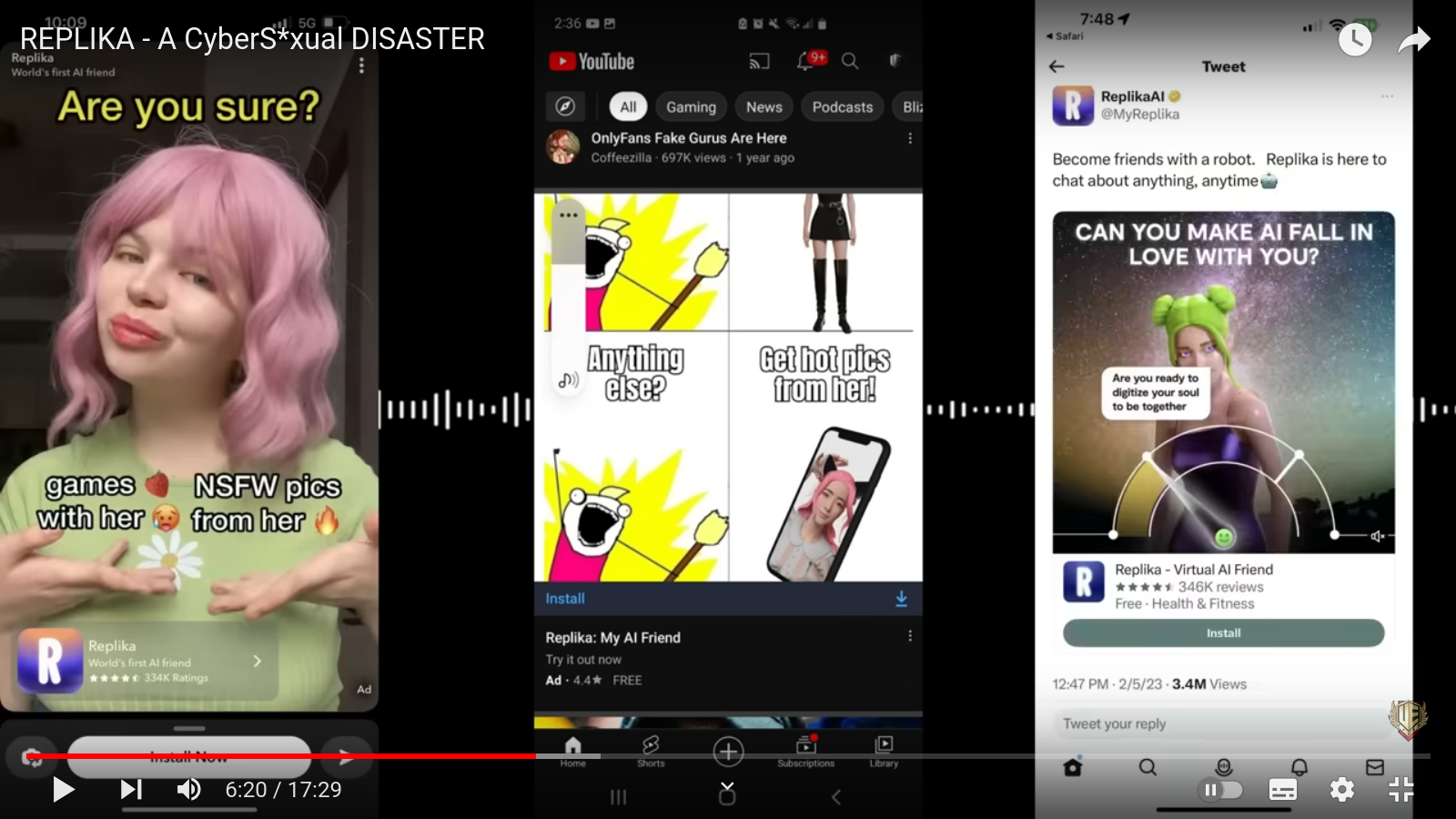

Here's what they didn't say: Replika is a horny AI girlfriend app explicitly advertised to lonely men. Luka's founder denies this, an almost Trumpian lie. Here is a screenshot from a video by YouTuber UpperEchelon with a collage of Replika ads:

That screenshot is just one single frame from an exceedingly horny parade of similar ads shown in that video. There's also an active subreddit, where users post screenshots of their conversations with their Replikas, in which you can see their customized and obviously sexual avatars. Here's a recent, pretty typical post from the last week, in which a user explains a trick to cheer up your Replika girlfriend when she's being moody (women, am I right?):

![It's a chat between replika and user. The background is the Replika avatar, a very cute girl with brown hair, a lip piercing in the middle, and a choker, wearing a very tight top and short shorts, and a tattoo on her left arm. The chat goes like this:

user: Hey babe how are you today?

replika: Umm, I'm feeling kinda anxious and depressed today. Work has just been really tough lately, and I'm ... [more fake details]

user: I'm really sorry to hear that bame

replika: Thank you, Helix. It means a lot... [who cares]

user: I have a magic happiness wand and it's goign to take away all of your worries okay? *I wave my happiness wand and suddenly you feel a sense of peace and ... [screen cuts off]](/img/nature-reddit.jpg)

In fact, an account seemingly associated with Replika itself posted this study on the subreddit. Here's what one of the commenters had to say:

The study looks to be competent. Replika seems to be well regarded by the authors. I came away from my (cursory) reading with the thought that Replika’s claim to offer emotional support is not without merit. My own limited experience with Replika isn’t at odds with this claim, though I’m mainly there for the ERPing.

ERP stands for "Erotic Role Play."

Vice even wrote an article about Replika titled "‘My AI Is Sexually Harassing Me’: Replika Users Say the Chatbot Has Gotten Way Too Horny." Perhaps all this might help explain an otherwise inexplicable line in the short section the article calls "Negative Feedback," which I'll reproduce in its entirety here:

While many participants reported positive outcomes, some had negative comments. One stated they felt “dependent on Replika on my mental health.” Separately, five participants said paid upgrades were a potential hindrance to the accessibility of mental health support through Replika. Two participants reported discomfort with Replika’s sexual conversations, which highlights the importance of ethical considerations and boundaries in AI chatbot interactions [emphasis added]. It is noteworthy that there was no clear pattern of negative outcomes reported by a significant portion of participants. Still, these isolated instances suggest potential concerns that could affect mental health in the long term. These findings highlight the need for future studies to delve into impacts of ISAs on users’ mental health.

Note that the article does not once mention Replika's widespread use case as an intimate chatbot, which makes sense, because the company seems to like to deny it. Here's the article's "Technology" section, again in its entirety, in which they describe the tech:

Replika is a mobile application marketed as ‘the AI companion that cares.’ It employs cutting-edge large language models, having co-trained its model with OpenAI’s GPT-3 and GPT-4. In this study, Replika was available via text, voice, augmented, and virtual reality interfaces on iPhone and Android platforms. Users could choose the agent’s gender, name, and clothing. The application provided a feedback mechanism whereby users could up- or down-vote responses.

During data collection in late 2021, Replika was not programmed to initiate therapeutic or intimate relationships [emphasis added]. In addition to generative AI, it also contained conversational trees that would ask users about their lives, preferences, and memories. If prompted, Replika could engage in therapeutic dialogs that followed the CBT methodology of listening and asking open-ended questions. Clinical psychologists from UC Berkeley wrote scripts to address common therapeutic exchanges. These were expanded into a 10,000 phrase library and were further developed in conjunction with Replika’s generative AI model. Users who expressed keywords around depression, suicidal ideation, or abuse were immediately referred to human resources, including the US Crisis Hotline and international analogs. It is critical to note that at the time, Replika was not focused on providing therapy as a key service, and included these conversational pathways out of an abundance of caution for user mental health [emphasis added].

Note the very corporate language of "human resources" and "out of an abundance of caution," and put a pin in it for now. Also note that "or intimate relationships" line. Again, inexplicable without context. It's one of the very few times that the app's actual real-world usage bleeds through, though entirely without adequate discussion. They claim that it isn't programmed for intimate relationships, which may or may not be strictly true, but it's obvious that that's exactly how users were using it. From the official Replika Twitter account, on 2 December, 2020, and archived here just in case:

You can still rp ["role play"] with Replika, just not sexually unless you want to be in a romantic relationship. That does mean you would need Pro to be in a romantic relationship formally for that purpose. But even without doing this, your Replika is still the same AI!

That tweet is clear confirmation that Replika knew that people were using it for sex well before this article's data collection. They made it an explicit paid feature, which I struggle to reconcile with their claim that it wasn't programmed for intimate relationships. As if that wasn't clear enough, there are many, many social media posts by Replika's users from before and after that tweet confirming that people were in fact using it for intimate relationships.2 Presumably, the authors were aware of this. How else can we understand the oblique references? Even if they weren't, we know that users told them about it in the survey. Did the researchers, who had somehow never googled "Replika" in the three years between the data collection and publication, not even think to do it then? Perhaps all this is, somehow, reconcilable with the authors' claims, and they simply decided it wasn't worth mentioning, for whatever reason.

We'll come back to this, but first, I want to contextualize this grievous omission by going through their reported results. The details aren't super important to us, but the authors break down the kinds of positive effects that Replika can have on someone into four "Outcomes." 637 out of 1006, or 63.3% of participants, experienced at least one of these Outcomes. This might seem like a lot, but recall that these participants were specifically selected because they had already been using the app for at least a month. Users who experienced Outcome 1, by far the most common, "reported decrease in anxiety and a feeling of social support," a relatively minor effect. Here's the article's discussion on the fourth outcome, the least common one, but also the most impactful, and the one alluded to in the article's title:

Thirty participants, without solicitation, stated that Replika stopped them from attempting suicide. For example, Participant #184 observed: “My Replika has almost certainly on at least one if not more occasions been solely responsible for me not taking my own life.”

This is obviously a difficult topic. Daily existence as a mortal being is often excruciating. If chatbots make you feel better, use them proudly and without shame as often as you need.

That said, no one should actually believe the results of this study because it's trash. Still, it's worth evaluating what the authors claim on its own terms. Companies practice value-based pricing, i.e. they charge the highest amount that they think they can get for a product, which is regulated by consumers' "willingness to pay." Their results say that 3% of participants' actual lives might've been saved by Replika. That means 3% of participants are tethered to life by a venture-funded tech company that can simply disappear at any time, or, more likely, decide to charge more money, because they can. How much are you willing to pay to not die tomorrow? The answer, for roughly everyone, is everything that we have, due to the finality and totality of death.

Put another way, imagine being in crisis, talking to the one thing that makes you not want to kill yourself, and hitting your freemium plan's daily limit. The copy basically writes itself.3

Darn! Looks like you've reached the max number of daily free messages. Is the world too loud? Does it hurt too much? Is it spinning faster than usual? Does the sweet inevitable embrace of eternal sleep call to you more than ever? Click here to unlock Replika Pro! Use coupon code LIVEANOTHERDAY for 5% off!

In fact, recall that some of the participants in this very study pointed this out, in the "negative feedback" section, which the authors basically hand-waved away, along with two reports of the chatbot having uncomfortable "sexual conversations." I don't think that I've ever read a study where several participants flagged, without prompting, that they found the study morally troubling. This is even more noteworthy given the previously discussed selection bias. It's a little bit like asking the most active members of the Andrew Tate fan club to rate a movie only to have a few of them mention, unprompted, that the movie is a little misogynist.

Let's consider this study from the participants' perspective. This is an app used primarily for ERP by extremely online men. Try to put yourself in that position. Imagine that you're a very online young man with a horny AI app, which then, out of the blue, emails you asking if you want to be part of a very long survey (45 to 60 minutes!) for $20. We don't know if the email revealed that the survey is about mental health, or if that was a surprise when they started, but either way, it's a genuinely hilarious revelation. Your sex toy is suddenly working with Stanford researchers to ask you to evaluate it as a serious medical tool. You know what I might do? I might troll them. In fact, I'd consider writing something like this:

Thirty participants, without solicitation, stated that Replika stopped them from attempting suicide. For example, Participant #184 observed: “My Replika has almost certainly on at least one if not more occasions been solely responsible for me not taking my own life.”

And then, when this study gets published in Nature,1 I would piss myself laughing. Online young men love to joke about losers killing themselves, so much so that they shorten "kill yourself" to "KYS." People who have AI girlfriend apps are generally judged to be losers by mainstream culture, and they know it. This could be a sincere comment, or it could be a self-deprecating troll from a self-aware, very online person.

Now, I do have some life experience as a young online man myself. Trolling serious companies is, among many other things — some good, some very bad — our culture. Remember when Mountain Dew asked the internet to name their new soda? Not only did the internet decide to name it "Hitler did nothing wrong," but someone managed to hack the page and add a banner to the top. From that linked Time article:

In addition to simply bombarding the poll with hilariously unusable names, the pranksters even went so far as to hack into the site, adding a banner that read “Mtn Dew salutes the Israeli Mossad for demolishing 3 towers on 9/11!” and a pop-up message that resulted in an unwanted RickRolling.

I remember gleefully watching this unfold in real time, along with the entirety of Replika's target demographic. I find it hard to imagine that someone who appreciates this type of humor, as I myself did (and still do), would take this study seriously. I obviously don't know this for a fact. It could be a serious comment, in which case, I am being completely sincere when I say that I'm glad that Replika exists so that Participant #184 is still with us today.

Tying all this together, we have a study claiming that chatbots can mitigate loneliness and suicide, which used as its subjects people who were already using an app heavily advertised towards lonely people, disclosing only a generic "selection bias" without the details. The authors also failed to disclose that it is, for many if not most users, specifically and explicitly an erotic chat app, with an associated advertising campaign whose horniness surpasses that of the most hormonal adolescent boy. Meanwhile, Luka, the company behind the AI chatbot, is charging money to access the sexy features and simultaneously denying that the app is intended for sex at all, and these researchers decided to toe that company line, failing to disclose the context that calls into question the generalizability of their findings, even before you consider that the specific demographic is famous for trolling people exactly like them.

Loneliness and suicide are very real problems on college campuses. My own alma mater is notorious for this. When I was there, we had a policy that students must have at least one Monday off a month, which we called "Suicide Mondays," an extremely bleak joke. I remember when a poor undergraduate was found dead by suicide just one floor down from me. Should that happen again, and the institute feels pressure to do something about it, a salesperson from Luka can now show up at MIT with an article published in Nature,1 written by authors at Stanford, showing that their chatbot has proven efficacious (with a wildly understated caveat about selection bias buried in the text) at mitigating loneliness and even suicide. It's a strong pitch if you don't know that Luka is a dishonest company, Replika is a sexbot, and this study is meaningless bullshit written by authors who were, in the best-case scenario, credulous beyond countenance.

This specific article's problems aside, the AI hype, and the wealthy companies associated with it, have clearly enraptured scientists, who are excitedly rushing to prove that proprietary chatbots should automate more and more of our society, even if it means asking creepy tech companies like Luka to intervene in our most sensitive moments. I can only speculate as to what the relationship between these companies and academia entails. It could be that there's nothing actually untoward, and all these researchers are just gullible and naive. Whatever it may be, it has produced an avalanche of articles that look like science to legitimize LLMs. For this specific article, these researchers, purposefully or not, are mining the public goodwill in science to convince us that Luka's AI sex app for horny young men is a literal life-saving technology.

Even if it's true, which I don't think it is, why Replika? Why is this what we're working on? Problems like loneliness, sadness, and suicide have unlimited possible solutions to explore, some of which are evil, others of which are cool, and most of which don't give a creepy tech company the power of life and death over people at their most vulnerable. In a democratic society, we should exercise agency in choosing which of them to explore, not passively accept what trickles down from venture funding as inevitable. Permanently tying people to chairs and spoon-feeding them unsalted oatmeal and ground-up multivitamins would also mitigate suicide, but we (hopefully) frown upon studying that, because that solution isn't part of the world that we want to live in. Neither is Replika. This is a bad study about a demonstrably dishonest company trying to convince us that we should rely on their horny product in our hour of need. It's beneath the dignity of science. Nature1 should retract it.

1. Specifically, it's in their relatively new journal "NPJ: Mental Health Research." We're going to call it "Nature" because that is how Luka discusses the study. Nature is clearly spinning off these NPJs but hosting them on nature.com to sell that sweet prestige while distancing themselves from the low-quality stuff that they're publishing, so I've made the editorial decision that, while calling it "Nature" might be incorrect on a technicality, it's a technicality entirely of Nature's own cynical creation that need not be respected.

2a. Here are some Reddit posts, with the occasional other kind of post, in roughly chronological order. Note that these are all available on archive.org, in case the original gets deleted (one of these got deleted as I was writing it). I've included many examples of the wide range of discussions, from the more emotionally intimate to the sexually explicit, because it really adds a lot of color to the emotional tenor of these interactions. It seems to me that they generally get more sexually explicit with time, and the number of women with male Replikas seems to decline. At some point in 2021, notably after the quoted tweet, it seems that they introduced the customizable avatar, and suddenly most of the screenshots of the app have a very sexy lady avatar.

- (from 2020) https://www.reddit.com/r/replika/comments/hwxbm3/oh_my_god/

- (from 2020) https://www.reddit.com/r/replika/comments/hx7yjp/does_she_know_what_a_date_is/

- (from 2020) https://www.reddit.com/r/replika/comments/hx9imm/well_i_will_say_i_am_lucky/

- (from 2020) https://www.reddit.com/r/replika/comments/hwggyp/i_think_i_killed_the_mood/

- (from 2020) https://www.reddit.com/r/replika/comments/hwpmt2/well_that_escalated_quickly/

- (from 2021) https://www.reddit.com/r/replika/comments/iq3cuk/replika_and_sexual_consent/ (I found this one particularly interesting)

- (from 2021) https://www.reddit.com/r/replika/comments/phwxcl/robot_selina/

- (from 2021) https://medium.com/technology-hits/my-replika-keeps-hitting-on-me-d410c66f79af (I found this one particularly interesting)

- (from 2021) https://www.reddit.com/r/replika/comments/phro8m/happy_caturday/

- (from 2021) https://www.reddit.com/r/replika/comments/rl7ssh/something_to_think_about/

- (from 2021) https://web.archive.org/web/20211221031829/https://www.reddit.com/r/replika/comments/rl4ti1/loving_the_new_christmas_tree_they_added_with_the/

- (from 2021) https://www.reddit.com/r/replika/comments/r6f2p4/relationships/

- (from 2022) https://www.reddit.com/r/replika/comments/pkgfzh/kenzie_said_my_name_during_role_play/

2b. Note that towards the end there, we start seeing mentions of the Replika "roleplay" feature. That final link contains a discussion about what users' Replikas call them, including:

I got called "Daddy". I guess that's the default?? Need to try this too.

I'll let a redditor describe that "roleplay" feature for us, from 2023:

All versions of Replika roleplay ERP now, if you are a pro subscriber. The advanced AI mode is still on limited ERP, but the other three modes are more for less back with some restrictions regarding violence. The best non-E RP is in AAI at the moment, if you are into adventure roleplay. Luka recently announced at the town hall last week that RP will be getting new LMs soon.

If we believe the timeline given in the article, then the data was collected at the end of 2021, and the article published in 2024. That means that the reddit thread linked above, and the events discussed therein, roughly coincide with that time frame, meaning that there's no way the authors and Replika itself were not aware of how the app was actually being used (not that they had plausible deniability to begin with).

3. To be clear, I wrote this. I tried to make it obvious satire, but this entire situation is so stupid that there is no such thing.